- RDD is Spark's core abstraction.

- RDD stands for Resilient Distributed Dataset.

- An RDD is an immutable, distributed collection of objects: once created it cannot be modified, and transformations produce new RDDs instead.

- Each RDD is split into multiple partitions, which may be computed on different nodes of the cluster.

- We can create an Apache Spark RDD in two ways:

    - Parallelizing an existing collection.

    - Loading an external dataset.

- Now we will see an example program that creates an RDD by parallelizing a collection.

- In Apache Spark's Java API, the JavaSparkContext class provides the parallelize() method.

- Let us look at a simple example program that creates an Apache Spark RDD in Java.

Program #1: Write an Apache Spark Java example program to create a simple RDD using the parallelize() method of JavaSparkContext.

```java
package com.instanceofjava.sparkInterview;

import java.util.Arrays;

import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaRDD;
import org.apache.spark.api.java.JavaSparkContext;

/**
 * Apache Spark examples: RDD in Spark example program
 * @author www.instanceofjava.com
 */
public class SparkTest {

    public static void main(String[] args) {
        // Run Spark locally with 2 worker threads
        SparkConf conf = new SparkConf().setMaster("local[2]").setAppName("InstanceofjavaAPP");
        JavaSparkContext sc = new JavaSparkContext(conf);

        // Create an RDD by parallelizing an in-memory Java collection
        JavaRDD<String> strRdd = sc.parallelize(Arrays.asList("apache spark element1", "apache spark element2"));

        System.out.println("apache spark rdd created: " + strRdd);

        // first() is an action: it returns the first element in this RDD
        System.out.println(strRdd.first());

        // Release the resources held by the Spark context
        sc.close();
    }
}
```

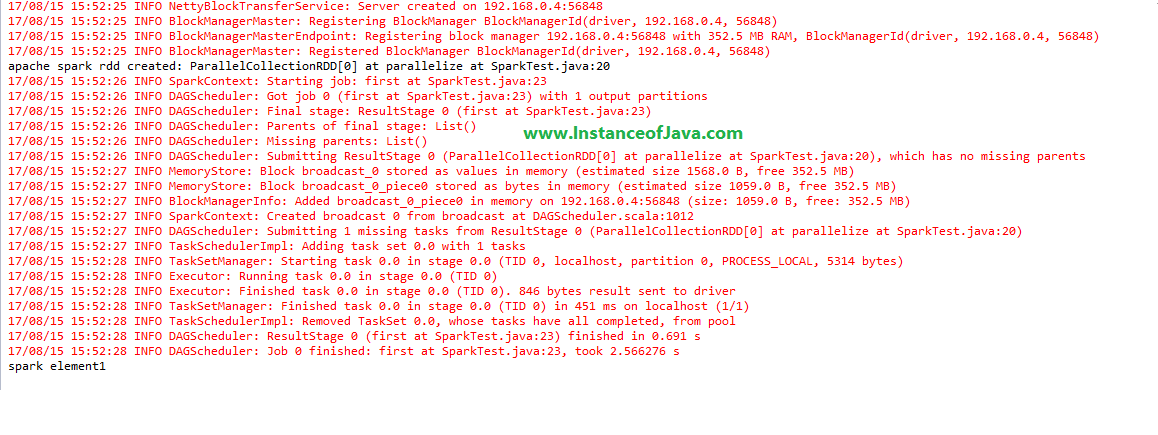

Output:

```
apache spark rdd created: ParallelCollectionRDD[0] at parallelize at SparkTest.java:21
apache spark element1
```
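The program above does not pass a partition count, so parallelize() falls back to the default parallelism, which for a local[2] master is typically 2. JavaSparkContext also offers an overload, parallelize(list, numSlices), for choosing the count explicitly, and getNumPartitions() on the resulting RDD reports it. To make the idea of partitions concrete, here is a plain-Java sketch that does not use Spark at all. The class PartitionSketch and its slice() helper are hypothetical names for illustration: slice() splits a list into numSlices contiguous slices using start = i * n / numSlices and end = (i + 1) * n / numSlices. This only approximates how an in-memory collection gets divided; Spark's own ParallelCollectionRDD logic handles more cases.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class PartitionSketch {

    // Hypothetical helper (not Spark API): split a list into numSlices
    // contiguous slices with start = i * n / numSlices, end = (i + 1) * n / numSlices
    public static <T> List<List<T>> slice(List<T> data, int numSlices) {
        if (numSlices < 1) {
            throw new IllegalArgumentException("numSlices must be positive");
        }
        List<List<T>> slices = new ArrayList<>();
        long n = data.size();
        for (int i = 0; i < numSlices; i++) {
            int start = (int) ((i * n) / numSlices);
            int end = (int) (((i + 1) * n) / numSlices);
            // subList returns a view; copy it so each slice is independent
            slices.add(new ArrayList<>(data.subList(start, end)));
        }
        return slices;
    }

    public static void main(String[] args) {
        List<String> data = Arrays.asList("a", "b", "c", "d", "e");
        List<List<String>> parts = slice(data, 2);
        for (int i = 0; i < parts.size(); i++) {
            System.out.println("partition " + i + ": " + parts.get(i));
        }
    }
}
```

Running main prints `partition 0: [a, b]` and `partition 1: [c, d, e]`. Because of integer division, slice sizes differ by at most one element, so the work is spread roughly evenly across partitions.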
